Considerations when using Microsoft NLB with VMware Horizon View

A load balancer is an integral component of (nearly) every VMware Horizon View design. Not only to distribute the connections among a number of connection or security servers, but also to provide high availability in case of a connection or security server failure. Without a load balancer, connection attempts will fail, if a connection or security server isn’t available. Craig Kilborn wrote an excellent article about the different possible designs of load balancing. Craig highlighted Microsoft Network Load Balancing (NLB) as one of the possible ways to implement load balancing. Jason Langer also mentioned Microsoft NLB in his worth reading article “The Good, The Bad, and The Ugly of VMware View Load Balancing”.

Why Microsoft NLB?

Why should I use Microsoft NLB to load balance connections in my VMware Horizon View environment? It’s a question of requirements. If you already have a load balancer (hopefully redundant), then there is no reason to use Microsoft NLB for load balancing. Really no reason? A single load balancer is a single point of failure and therefore you should avoid this. Instead of using a single load balancer, you could use Microsoft NLB. Microsoft NLB is for free, because it’s part of Windows Server. At least two servers can form a highly available load balancer and you can install the NLB role directly onto your Horizon View connection or security servers.

How does it work?

Microsoft Windows NLB is part of the operating system since Windows NT Server. Two or more Windows servers can form a cluster with at one or more virtual ip addresses. Microsoft NLB knows three different operating modes:

- Unicast

- Multicast

- Multicast (IGMP)

Two years ago I wrote an article why unicast mode sucks: Flooded network due HP Networking Switches & Windows NLB. This leads to the recommendation: Always use multicast (IGMP) mode!

Nearly all switches support IGMP Snooping. If not, spend some money on new switches. Let me get this clear: If your switches support IGMP Snooping, enable this for the VLAN to which the cluster nodes are connected. There is no need to configure static multicast mac addresses or dedicated VLANs to avoid flooding.

If you select the multicast (IGMP) mode, each cluster node will periodically send an IGMP join message to the multicast address of the group. This address is always 239.255.x.y, where x and y corresponds to the last two octets of the virtual ip address. Upon receiving these multicast group join messages, the switch can send multicasts only to the ports of the group members. This avoids network flooding. Multicast (IGMP) simplifies the configuration of a Microsoft NLB.

- Enable IGMP Snooping for the VLAN of the cluster nodes

- Enable the NLB role on each server that should participate the cluster

- Create a cluster with multicast (IGMP) mode on the first node

- Join the other nodes

- That’s it!

The installation process of a Microsoft NLB is not particularly complex, and if the NLB cluster is running, there is not much maintenance to do. As already mentioned, you can put the NLB role on each connection or connection server.

What are the caveats?

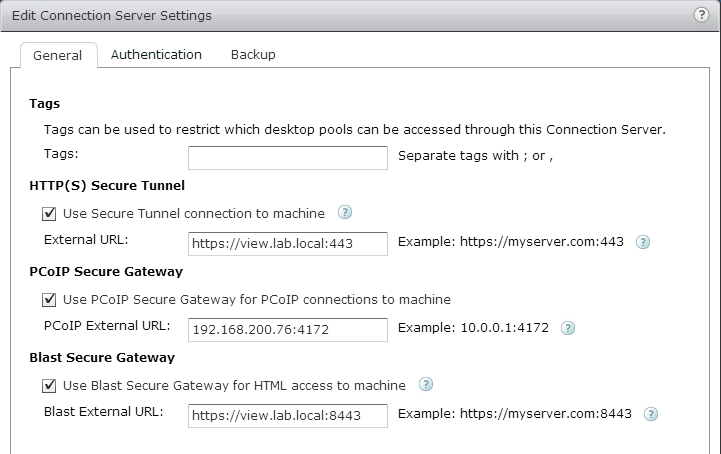

Sounds pretty good, right? But there are some caveat when using Microsoft NLB. Microsoft NLB does not support sticky connections and it does not support service awareness. Why is this a problem? Let’s assume that you have enabled “HTTP(S) Secure Tunnel”, “PCoIP Secure Gateway” and “Blast Secure Gateway”.

Patrick Terlisten/ vcloudnine.de/ Creative Commons CC0

In this case, all connections are proxied through the connection or security servers.

The initial connection from the Horizon View client to the connection or security server is used for authentication and selection of the desired desktop pool or application. This is a HTTPS connection. At this point, the user has no connection to a pool or an application. When the user connects to a desktop pools or application, the client will open a second HTTPS connection. This connection is used to provide a secure tunnel for RDP. Because it’s the same protocol, the connection will be directed to the same connection or security server as before. The same applies to BLAST connections. But if the user connects to a pool via PCoIP, the View client will open a new connection using PCoIP with destination port 4172. If the PCoIP External URL refers to the load balanced URL, the connection can be directed to another connection or security server. If this is the case, the PCoIP connection will fail. This is because the source ip address might be the same, but another destination port is used. VMware describes this behaviour in KB1036376 (Unable to connect to the PCoIP Secure Gateway when using Microsoft NLB Clustering).

Another big caveat is the missing service awareness. Microsoft NLB does not check, if the load balanced service is available. If the load balanced service fails, Microsoft NLB will not stop to redirect requests to the the cluster node that is running the failed service. In this case, the users connection requests will fail.

Still the ugly?

So is Microsoft NLB still the ugly option? I don’t think so. Especially for small deployments, where the customer does not have a load balancer, Microsoft NLB can be a good option. If you want to load balance connection servers, Microsoft NLB can do a great job. In case of load balancing security servers, you should take a look at KB1036376, because you might need at least 3 public IP addresses for NAT. The missing service awareness can be a problem, but you can workaround it with a responsive monitoring.

In the end, it is a question of requirements. If you plan to implement other services that might require a load balancer, like Microsoft Exchange, you should take a look at a redundant, highly available load balancer appliance.