Performance issues on new HW

As part of a project, old server hardware was replaced with shiny new hardware. Besides the servers, the storage hardware and infrastructure were also replaced. The new hardware was installed alongside the old hardware. Because the customer has a high virtualization ratio, nearly all servers were VMs, and the VMs were migrated without downtime. The customer uses a Windows Server 2008 R2 failover cluster for file services and MS SQL Server. The MS SQL Server hosts the database for the ERP software. This cluster used in-guest iSCSI, so we were able to move it online to the new server hardware and migrate the cluster disks later. At a certain point both cluster nodes were running on the new hardware, which allowed a direct performance comparison between the old and the new hardware. The runtime of batch jobs and the experience of the users showed us that the new hardware was slower. We were puzzled…

The virtualization hardware

CPU resources were never a problem at this customer, but available memory was.

| Server | CPUs | Memory |
|---|---|---|
| HP ProLiant DL360 G7 | 2x Intel Xeon X5650 (Westmere-EP) | 96 GB |
| HP ProLiant DL560 Gen8 | 4x Intel Xeon E5-4603v2 (Ivy Bridge-EP) | 384 GB |

The E5-4603v2 has a lower base frequency than the X5650 and no Turbo Boost mode. In addition, the E5-4603v2 is “only” a quad-core CPU. The reason for switching from dual socket to quad socket was to achieve a higher consolidation ratio at a reasonable price/performance ratio. It’s like a change from a sports car to a spacious family car.
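To put rough numbers on the sports car versus family car comparison, here is a minimal sketch in Python. The core counts and base clocks (6 cores at 2.66 GHz for the X5650, 4 cores at 2.2 GHz for the E5-4603v2) are taken from Intel's published specifications, not from the measurements in this post, so please verify them before relying on them. The `Host` class and its fields are purely illustrative, and "aggregate GHz" is only a crude capacity indicator that ignores architectural differences between the CPU generations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    """Illustrative description of a host's CPU configuration."""
    name: str
    sockets: int
    cores_per_socket: int
    base_ghz: float  # base clock per core, assumed from Intel's spec sheets

    @property
    def total_cores(self) -> int:
        return self.sockets * self.cores_per_socket

    @property
    def aggregate_ghz(self) -> float:
        # Crude capacity indicator: cores multiplied by base clock.
        return round(self.total_cores * self.base_ghz, 2)


old_host = Host("DL360 G7 (2x X5650)", sockets=2, cores_per_socket=6, base_ghz=2.66)
new_host = Host("DL560 Gen8 (4x E5-4603v2)", sockets=4, cores_per_socket=4, base_ghz=2.2)

for host in (old_host, new_host):
    print(f"{host.name}: {host.total_cores} cores, "
          f"{host.base_ghz} GHz per core, {host.aggregate_ghz} GHz aggregate")
```

Under these assumptions the new host offers more cores and more aggregate clock, but every single core is slower. That is fine for consolidation and unfavourable for anything that depends on single-thread performance.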

The observed effects

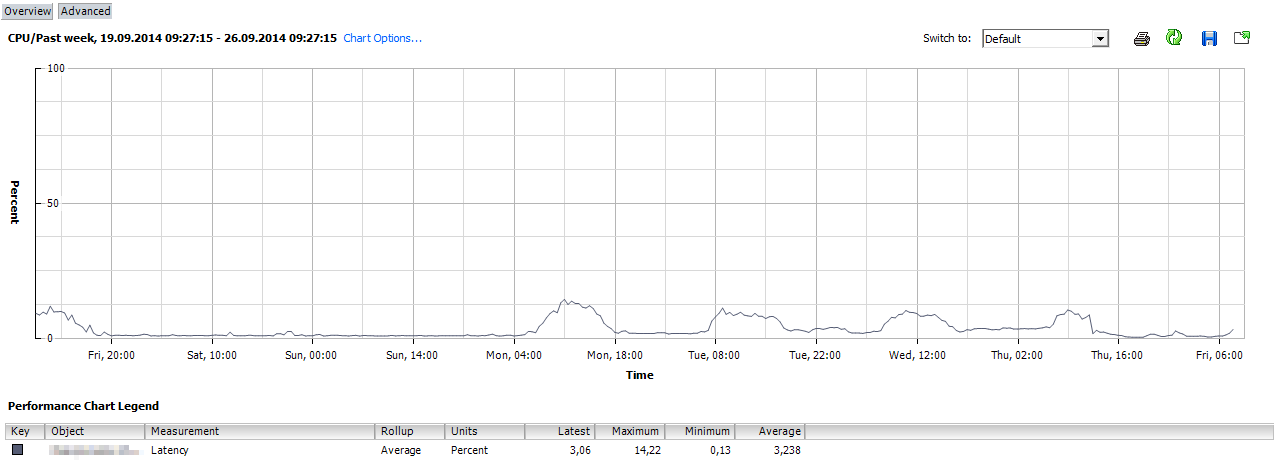

The customer moved both cluster nodes from the old to the new server hardware during the night (thanks to VMware for vMotion without shared storage). The next day we observed that batch jobs executed on the MS SQL Server were running slower. In addition, users complained about slightly slower response times while working with the ERP software. While searching for the reason, we observed high CPU latencies and high %RDY values. This is a screenshot of the CPU latencies on the host.

Patrick Terlisten/ vcloudnine.de/ Creative Commons CC0

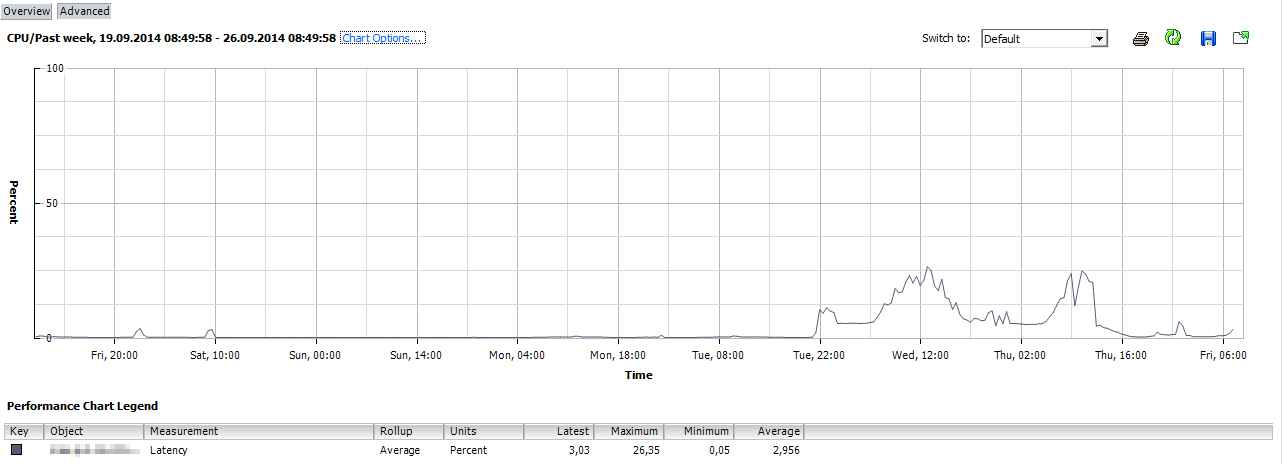

This is a screenshot of the latencies we observed on the VM. As you can see, the latencies started on Tuesday at ~10 pm, exactly when the cluster VMs were moved to the new hardware. I should add that the two VMs had been running alone on the old hardware for several days; each VM had its own physical server.

Patrick Terlisten/ vcloudnine.de/ Creative Commons CC0

Relatively quickly we found the cause of the high latencies: power saving settings. We switched the Power Regulator Mode in the BIOS to “HP Static High Performance mode” and the power management policy in ESXi to “High Performance”. The setting in ESXi only takes effect if the power management in the BIOS is set to “OS Control mode”. If you set the BIOS to “HP Static High Performance mode”, the power management setting in ESXi has no effect (thanks to Frank Buechsel for the hint). There is a Support Center article from HP which describes this issue. The article also refers to VMware KB1018206. After changing the settings, the latencies were gone, the %RDY values were lower and the response times were slightly better. Disabling the power management settings had been missed during the hardware setup.

The customer and I did some additional tests. We switched the VMs from 6 to 4 vCPUs. This dramatically reduced the %RDY times, but the response times for the users increased slightly. Then we switched to 8 vCPUs (2 sockets with 4 cores each). This improved the response times, and the %RDY times were still lower compared to the 6 vCPU setup, but the batch jobs weren’t faster. In fact, the nightly batch jobs benefit from a high clock rate (single-thread performance). This was confirmed by the software company that developed the ERP software my customer is running. They also confirmed that parts of the application logic run in the database. Parts of the ERP software are written in VB6, which also influences the scalability of the software. The MS SQL Server itself profits from multiple cores. It is a little odd, since the ERP software puts an incredible amount of work on the database server. But hey: I’m not a developer. Tasks like SSIS packages or direct SQL queries are incredibly fast; unfortunately, we (currently) can’t see the performance boost in the ERP software. In the end it’s more an architectural problem of the ERP software than undersized hardware.
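When comparing %RDY between setups with different vCPU counts, it helps to know how the value is derived. The vSphere performance charts report CPU ready as a summation in milliseconds per sampling interval, and the commonly documented conversion is ready time divided by the interval length, times 100. Below is a minimal sketch of that conversion. The function name, the per-vCPU normalisation and the sample value are my own assumptions for illustration and are not taken from the customer's environment; the 20 second default corresponds to the real-time chart interval.

```python
def ready_percent(ready_ms: float, interval_s: float = 20.0, vcpus: int = 1) -> float:
    """Convert a CPU ready summation (ms) into a percentage.

    ready_ms   -- ready summation reported for the sampling interval
    interval_s -- length of the sampling interval in seconds
                  (20 s for real-time charts)
    vcpus      -- divide a VM-level summation by the vCPU count to get an
                  average per vCPU (assumption: the value covers all vCPUs)
    """
    if interval_s <= 0 or vcpus <= 0:
        raise ValueError("interval_s and vcpus must be positive")
    return ready_ms * 100.0 / (interval_s * 1000.0) / vcpus


# Hypothetical sample: 1000 ms of ready time in one real-time interval.
print(ready_percent(1000))            # value for a single vCPU
print(ready_percent(1000, vcpus=4))   # same summation averaged over 4 vCPUs
```

The per-vCPU view matters for a comparison like the one above: the same summation spread over more vCPUs means less waiting per vCPU, so raw values from a 4, 6 and 8 vCPU VM should not be compared without normalising them first.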

Final words

It’s useful to look at the performance requirements in more detail and to talk to the developers in order to understand the requirements of a particular piece of software. It is important to know whether an application is single-threaded or whether it benefits from multiple cores. In the worst case, the additional hardware performance simply fizzles out in the application. Based on these requirements, you can then select the appropriate CPUs. Size your virtual machines so that they align with physical NUMA boundaries. In my case, a VM with 6 vCPUs was moved to pCPUs with 4 cores per socket. That wasn’t optimal. The “Performance Best Practices for VMware vSphere 5.5” whitepaper is a good read. If you have to deal with latency-sensitive workloads, take a look at the “Best Practices for Performance Tuning of Latency-Sensitive Workloads in vSphere VMs” whitepaper. In the end it’s a question of budget whether you can go for more cores, more MHz or both.
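The NUMA remark can be turned into a quick sanity check. The following is a simplified sketch, assuming one NUMA node per socket and ignoring hyper-threading and the memory size of the VM. The function name and the returned fields are hypothetical, and this is not how the ESXi scheduler makes its decisions; it only shows why 6 vCPUs on 4-core sockets is an awkward fit.

```python
import math
from typing import NamedTuple


class NumaFit(NamedTuple):
    nodes_spanned: int      # minimum number of NUMA nodes the vCPUs need
    fits_single_node: bool  # True if all vCPUs fit into one node
    fills_whole_nodes: bool # True if the vCPU count is a multiple of the node size


def numa_fit(vcpus: int, cores_per_node: int) -> NumaFit:
    """Rough check of a vCPU count against the physical cores per NUMA node."""
    if vcpus <= 0 or cores_per_node <= 0:
        raise ValueError("vcpus and cores_per_node must be positive")
    return NumaFit(
        nodes_spanned=math.ceil(vcpus / cores_per_node),
        fits_single_node=vcpus <= cores_per_node,
        fills_whole_nodes=vcpus % cores_per_node == 0,
    )


# The three VM sizes tested above, on hosts with 4 cores per socket.
for vcpus in (4, 6, 8):
    print(vcpus, numa_fit(vcpus, cores_per_node=4))
```

With 4 cores per node, 4 vCPUs stay within one node, 8 vCPUs fill exactly two nodes, and 6 vCPUs span two nodes without filling them.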